Nearest Addition Algorithm Is Closely Related To Select One

Here the value of k is 1 (k = 1). In Section 3, we first formally define the studied problem, i.e., one-class collaborative filtering, and then present our solution, the k-reciprocal nearest neighbors algorithm (k-RNN), in detail.

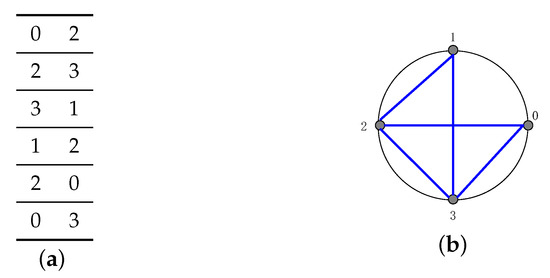

Symmetry Free Full Text Data Hiding In Symmetric Circular String Art Html

An average of the missing-data variables was derived from the kNNs and used for each missing value (Batista and …).

The nearest addition algorithm is closely related to select one. The k-nearest neighbors algorithm classifies objects according to the closest training samples in the feature space, and it is one of the simplest algorithms. Move to the nearest unvisited vertex, i.e., along the edge with the smallest weight.

In Section 2, we discuss some closely related work. Initialize the set of active clusters to consist of n one-point clusters, one for each input point. The kNN imputation method uses the kNN algorithm to search the entire data set for the k most similar cases (neighbors) that show the same patterns as the row with missing data.
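The imputation idea above can be sketched in a few lines. This is a minimal illustration, not the method of any particular paper: it assumes missing entries are marked with `None`, measures similarity with Euclidean distance on the columns the incomplete row actually has, and averages the k nearest complete rows. The names `knn_impute` and `_distance_on` are hypothetical.

```python
import math

def _distance_on(a, b, cols):
    # Euclidean distance restricted to the columns listed in `cols`.
    return math.sqrt(sum((a[j] - b[j]) ** 2 for j in cols))

def knn_impute(rows, k=2):
    """Replace each None with the mean of that column over the k most
    similar complete rows. Assumes at least one row has no missing values."""
    complete = [r for r in rows if None not in r]
    result = []
    for row in rows:
        if None not in row:
            result.append(list(row))
            continue
        # Measure similarity only on the variables this row actually has.
        observed = [j for j, v in enumerate(row) if v is not None]
        neighbors = sorted(complete, key=lambda r: _distance_on(row, r, observed))[:k]
        filled = list(row)
        for j, v in enumerate(row):
            if v is None:
                # Average the neighbors' values for the missing variable.
                filled[j] = sum(n[j] for n in neighbors) / len(neighbors)
        result.append(filled)
    return result

data = [
    [1.0, 2.0, 3.0],
    [1.1, 2.1, 3.3],
    [8.0, 9.0, 10.0],
    [1.05, None, 3.1],  # middle value is missing
]
print(knn_impute(data, k=2)[3])
```

The last row's two nearest complete rows are the first two, so its gap is filled with roughly (2.0 + 2.1) / 2 = 2.05; the distant third row has no influence.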

The algorithm uses feature similarity to predict the values of new data points: each new point is assigned a value based on how closely it resembles the points in the training set. Addition, usually signified by the plus symbol (+), is one of the four basic operations of arithmetic, the other three being subtraction, multiplication, and division. The addition of two whole numbers results in the total amount, or sum, of those values combined.
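To make "predicting a value from feature similarity" concrete, here is a small kNN regression sketch. It assumes Euclidean distance and an unweighted mean of the k nearest targets; `knn_regress` is a hypothetical name, and real libraries offer more options (for example distance weighting).

```python
import math

def knn_regress(train_X, train_y, query, k=3):
    """Predict a value for `query` as the mean target of its k nearest
    training points, using Euclidean distance as the similarity measure."""
    ranked = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    nearest = ranked[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)

X = [[1.0], [2.0], [3.0], [10.0]]
y = [1.0, 2.0, 3.0, 10.0]
print(knn_regress(X, y, [2.1], k=3))  # neighbors 2.0, 3.0, 1.0 -> 2.0
```

The outlying point at 10.0 is ignored because it is not among the three most similar points to the query.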

In other words, heuristic algorithms are fast but are not guaranteed to produce the optimal circuit. Similarity is defined according to a distance metric between two data points. In addition, the algorithm applies the concept of mechanical work to produce a feasible (i.e., collision-free) route with optimal path quality.

Berkman, Schieber, and Vishkin (1993) first identified the procedure as a useful subroutine. The k-nearest neighbours algorithm is used for simple classification. In this case (k = 1), the new data point is assigned the target class of its single closest neighbor.
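A minimal classification sketch, assuming Euclidean distance and an unweighted majority vote; `knn_classify` is a hypothetical helper, not a library API. Setting `k=1` gives the single-closest-neighbor rule described above.

```python
import math
from collections import Counter

def knn_classify(train_X, train_y, query, k=1):
    """Majority vote among the k training points closest to `query`.
    With k = 1 this reduces to the plain nearest-neighbor rule."""
    ranked = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    votes = Counter(train_y[i] for i in ranked[:k])
    # Counter keeps first-seen order among equal counts, so a tied vote
    # goes to the label of the closer neighbor.
    return votes.most_common(1)[0][0]

X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6]]
y = ["a", "a", "a", "b", "b"]
print(knn_classify(X, y, [4.5, 5], k=1))  # "b": the single closest point
print(knn_classify(X, y, [4.5, 5], k=5))  # "a": three "a" votes outvote two "b"
```

The same query gets different labels for k = 1 and k = 5, which is why the choice of k matters.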

Python is the go-to programming language for machine learning, so what better way to discover kNN than with Python's famous packages NumPy and scikit-learn? Select 1/3 of the first path segment nearest to its end point. The example in the adjacent image shows a combination of three apples and two apples, making a total of five apples.

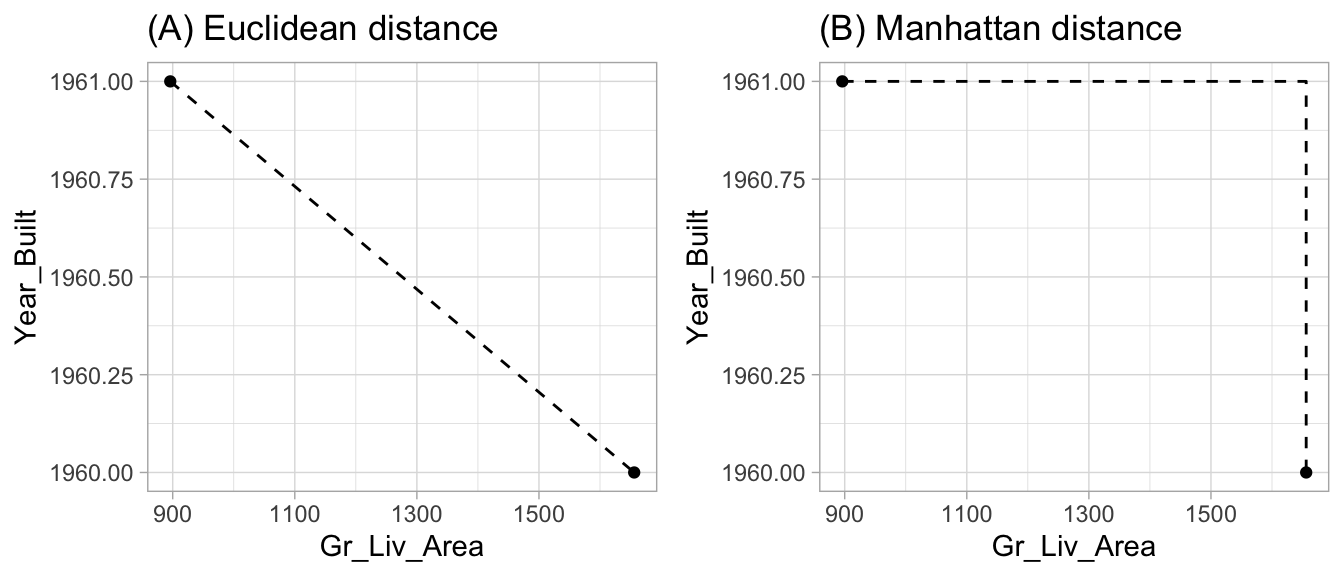

Intuitively, the nearest-neighbor chain algorithm repeatedly follows a chain of clusters A → B → C → …. A popular choice is the Euclidean distance, given by

$$d(p, q) = \sqrt{\sum_{i=1}^{n} (p_i - q_i)^2}$$

This problem can be solved efficiently by both parallel and non-parallel algorithms.

For each position in a sequence of numbers, search among the previous positions for the last position that contains a smaller value. Mazes containing no loops are known as simply connected, or perfect, mazes and are equivalent to a tree in graph theory. The algorithm quickly yields a short tour, but usually not the optimal one.
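The all nearest smaller values task described above has a well-known sequential solution using a stack. This is a sketch of that sequential approach only (not the parallel algorithms mentioned in the text); `all_nearest_smaller_values` is a hypothetical name, and `None` marks positions with no smaller earlier value.

```python
def all_nearest_smaller_values(seq):
    """For each position, return the last earlier value smaller than it,
    or None if there is none. Each value is pushed and popped at most once."""
    result = []
    stack = []  # candidate earlier values, strictly increasing bottom to top
    for x in seq:
        # A value >= x can never be the answer for x or any later position,
        # because x is smaller-or-equal and appears later.
        while stack and stack[-1] >= x:
            stack.pop()
        result.append(stack[-1] if stack else None)
        stack.append(x)
    return result

seq = [0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15]
print(all_nearest_smaller_values(seq))
# [None, 0, 0, 4, 0, 2, 2, 6, 0, 1, 1, 5, 1, 3, 3, 7]
```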

How should the value of K be chosen? In this tutorial, you'll get a thorough introduction to the k-Nearest Neighbors (kNN) algorithm in Python. Passalacqua, Biological Distance Analysis (2016), "k-Nearest Neighbor".

Selecting the value of K is the most critical problem in K-nearest neighbor. We organize the rest of the paper as follows. In computer science, locality-sensitive hashing (LSH) is an algorithmic technique that hashes similar input items into the same buckets with high probability.
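One common way to approach the choice of K, shown here as an assumption rather than the method any cited source uses, is leave-one-out cross-validation: classify each training point using all the others and keep the K with the best accuracy. The helpers `loo_accuracy` and `choose_k` and the candidate list are hypothetical.

```python
import math
from collections import Counter

def loo_accuracy(X, y, k):
    """Leave-one-out accuracy of a majority-vote k-NN classifier."""
    correct = 0
    for i in range(len(X)):
        # Rank all *other* points by Euclidean distance to the held-out one.
        others = sorted((j for j in range(len(X)) if j != i),
                        key=lambda j: math.dist(X[j], X[i]))
        votes = Counter(y[j] for j in others[:k])
        if votes.most_common(1)[0][0] == y[i]:
            correct += 1
    return correct / len(X)

def choose_k(X, y, candidates=(1, 3, 5)):
    """Pick the candidate k with the best leave-one-out accuracy;
    ties are resolved in favor of the smaller k."""
    return max(candidates, key=lambda k: (loo_accuracy(X, y, k), -k))

# Two clusters plus one mislabeled "b" point (1.5) inside the "a" cluster.
X = [[0.0], [1.0], [2.0], [3.0], [1.5], [10.0], [11.0], [12.0], [13.0]]
y = ["a", "a", "a", "a", "b", "b", "b", "b", "b"]
for k in (1, 3, 5):
    print(k, round(loo_accuracy(X, y, k), 3))
print("chosen k:", choose_k(X, y))
```

With this toy data, k = 1 scores 6/9 because the mislabeled point misleads its neighbors, while k = 3 and k = 5 both reach 8/9, so the sketch picks k = 3.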

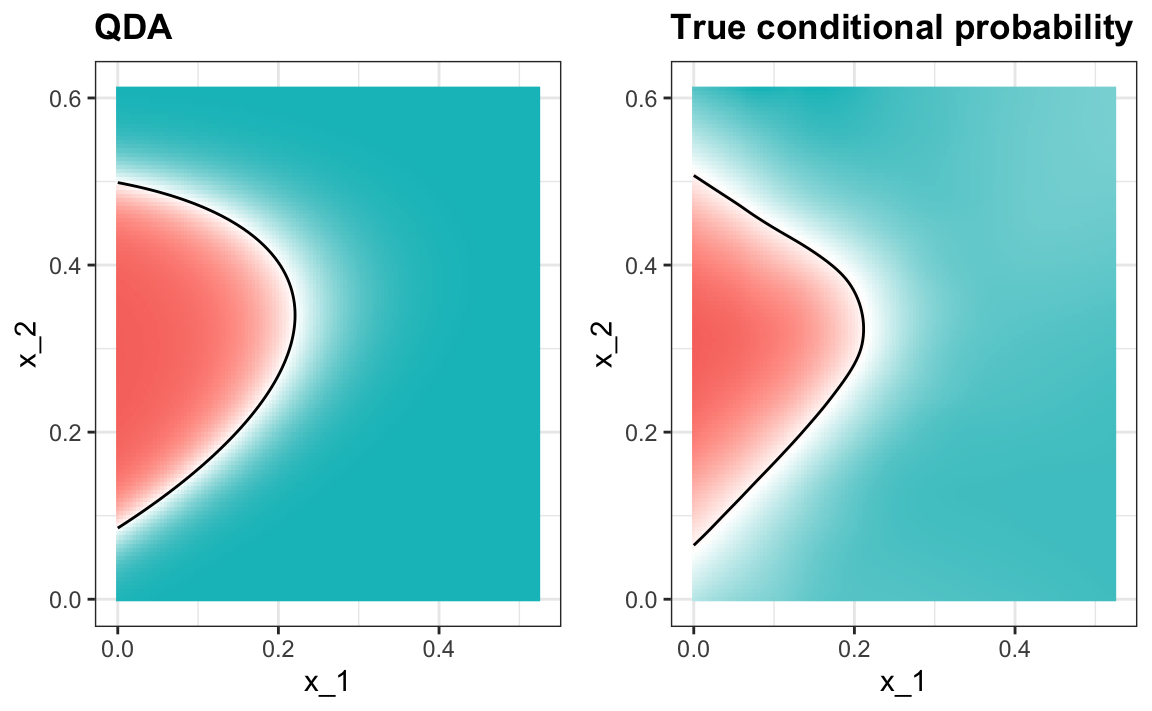

The k-nearest neighbor (kNN) rule is a classical non-parametric classification algorithm in pattern recognition and has been widely used in many fields due to its simplicity, effectiveness, and intuitiveness. In this paper, we essentially resolve the issue by providing an algorithm with query time $dn^{\rho(c)}$ using space $dn + n^{1+\rho(c)}$, where $\rho(c) = 1/c^2 + O(\log\log n / \log^{1/3} n)$. One of the most important aspects of the learning process is the assessment of the knowledge acquired by the learner.

Nearest Neighbor Algorithm. Thus, many maze-solving algorithms are closely related to graph theory. Intuitively, if one pulled and stretched out the paths in the maze in the proper way, the result could be made to resemble a tree. Each cluster in the chain is the nearest neighbor of the previous one, and the chain is followed until it reaches a pair of clusters that are mutual nearest neighbors.
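The chain-following idea can be sketched as follows. The source does not say which cluster distance is used; this sketch assumes complete linkage (one of the "reducible" linkages the nearest-neighbor chain method requires) and recomputes distances naively, so it favors clarity over speed. `complete_linkage` and `nn_chain` are hypothetical names.

```python
import math

def complete_linkage(a, b, points):
    """Cluster distance: the largest pairwise distance between members."""
    return max(math.dist(points[i], points[j]) for i in a for j in b)

def nn_chain(points):
    """Agglomerative clustering with the nearest-neighbor chain algorithm.
    Returns the merges as (cluster, cluster) pairs of frozensets of indices."""
    # Start with n one-point clusters, one for each input point.
    active = {frozenset([i]) for i in range(len(points))}
    chain, merges = [], []
    while len(active) > 1:
        if not chain:
            chain.append(next(iter(active)))  # begin at an arbitrary cluster
        current = chain[-1]
        nearest = min((c for c in active if c != current),
                      key=lambda c: complete_linkage(current, c, points))
        prev = chain[-2] if len(chain) >= 2 else None
        # prev chose current as its nearest neighbor; if prev is also (one of)
        # current's nearest, they are mutual nearest neighbors. Favoring prev
        # on ties keeps the chain from growing forever.
        if prev is not None and (complete_linkage(current, prev, points)
                                 <= complete_linkage(current, nearest, points)):
            chain.pop()
            chain.pop()
            active -= {current, prev}
            active.add(current | prev)
            merges.append((prev, current))
        else:
            chain.append(nearest)
    return merges

pts = [[0.0], [1.0], [5.0], [6.0], [20.0]]
for a, b in nn_chain(pts):
    print(sorted(a), "+", sorted(b))
```

The merge order can vary with the starting cluster, but for these points the resulting clusters are always {0, 1}, {2, 3}, then {0, 1, 2, 3}, and finally all five points.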

A small value of K means that noise will have a higher influence on the result. Nearest Neighbor Algorithm (NNA): select a starting point. Locality-sensitive hashing differs from conventional hashing techniques in that hash collisions are maximized, not minimized.

The other paths, by contrast, pass too close to one or both obstacles, especially near the corners. In more detail, the algorithm performs the following steps. In that problem, the salesman starts at a random city and repeatedly visits the nearest city until all have been visited.

The nearest neighbour algorithm was one of the first algorithms used to solve the travelling salesman problem approximately. Repeat until the circuit is complete.
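The nearest neighbour steps (select a starting point, move to the nearest unvisited vertex, repeat until the circuit is complete) can be sketched as below. It assumes cities are points in the plane with Euclidean edge weights; `nearest_neighbor_tour` and `tour_length` are hypothetical names.

```python
import math

def nearest_neighbor_tour(cities, start=0):
    """Greedy TSP heuristic: from the current city, move along the cheapest
    edge to an unvisited city; finally return to `start` to close the circuit."""
    unvisited = set(range(len(cities))) - {start}
    tour = [start]
    while unvisited:
        here = cities[tour[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(here, cities[j]))
        tour.append(nxt)
        unvisited.remove(nxt)
    tour.append(start)  # repeat until the circuit is complete
    return tour

def tour_length(cities, tour):
    """Total length of consecutive legs of the tour."""
    return sum(math.dist(cities[a], cities[b]) for a, b in zip(tour, tour[1:]))

cities = [(0, 0), (3, 0), (1, 0), (6, 0)]
tour = nearest_neighbor_tour(cities)
print(tour, tour_length(cities, tour))  # [0, 2, 1, 3, 0] 12.0
```

Each step only looks at the cheapest next edge, which is what makes the heuristic fast; nothing in the loop checks whether the finished circuit is the shortest one.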

Nearest neighbor is the special case of k-nearest neighbor classification with k = 1. In a typical classroom assessment (e.g., an exam, assignment, or quiz), an instructor or a grader provides students with feedback on their answers to questions related to the subject matter.

In the classification setting, the K-nearest neighbor algorithm essentially boils down to a majority vote among the K most similar instances to a given unseen observation. Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. Consider our earlier graph from Example123 shown below.
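To illustrate how locality-sensitive hashing puts similar items in the same bucket, here is a sketch of one specific LSH family, random-hyperplane hashing for angular (cosine) similarity. The source does not name a family, so this choice is an assumption, as are the hypothetical helpers `make_hyperplanes`, `lsh_signature`, and `build_buckets`.

```python
import random
from collections import defaultdict

def make_hyperplanes(dim, n_bits, seed=0):
    """Random Gaussian normal vectors, one per signature bit."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]

def lsh_signature(vec, planes):
    """One bit per hyperplane: which side of the plane `vec` lies on.
    Vectors separated by a small angle are likely to agree on every bit."""
    return tuple(int(sum(p * v for p, v in zip(plane, vec)) >= 0) for plane in planes)

def build_buckets(vectors, planes):
    """Group vector indices by signature; each signature is one bucket."""
    buckets = defaultdict(list)
    for i, vec in enumerate(vectors):
        buckets[lsh_signature(vec, planes)].append(i)
    return dict(buckets)

planes = make_hyperplanes(dim=3, n_bits=8, seed=42)
vectors = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [-1.0, -2.0, -3.0]]
print(build_buckets(vectors, planes))
```

The first two vectors point in the same direction, so they always collide in one bucket; the third points the opposite way and lands in a different bucket. Here collisions are the goal, which is the inversion of conventional hashing noted in the text.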

The kNN algorithm is one of the most famous machine learning algorithms and an absolute must-have in your machine learning toolbox. This is motivated by the fact that an algorithm with such an exponent exists for the closely related problem of finding the furthest neighbor [20]. In computer science, the all nearest smaller values problem is the following task.

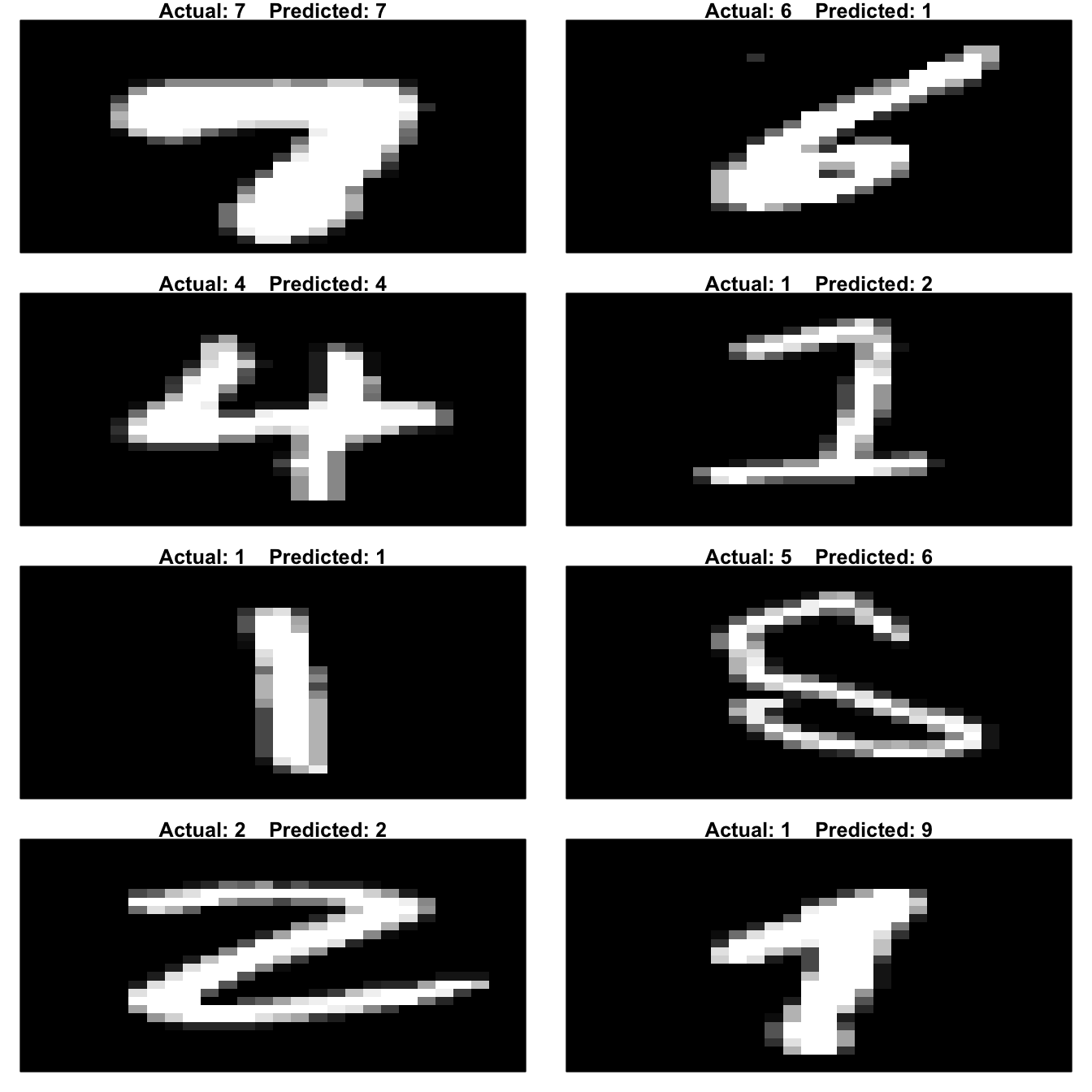

Chapter 31 Examples Of Algorithms Introduction To Data Science

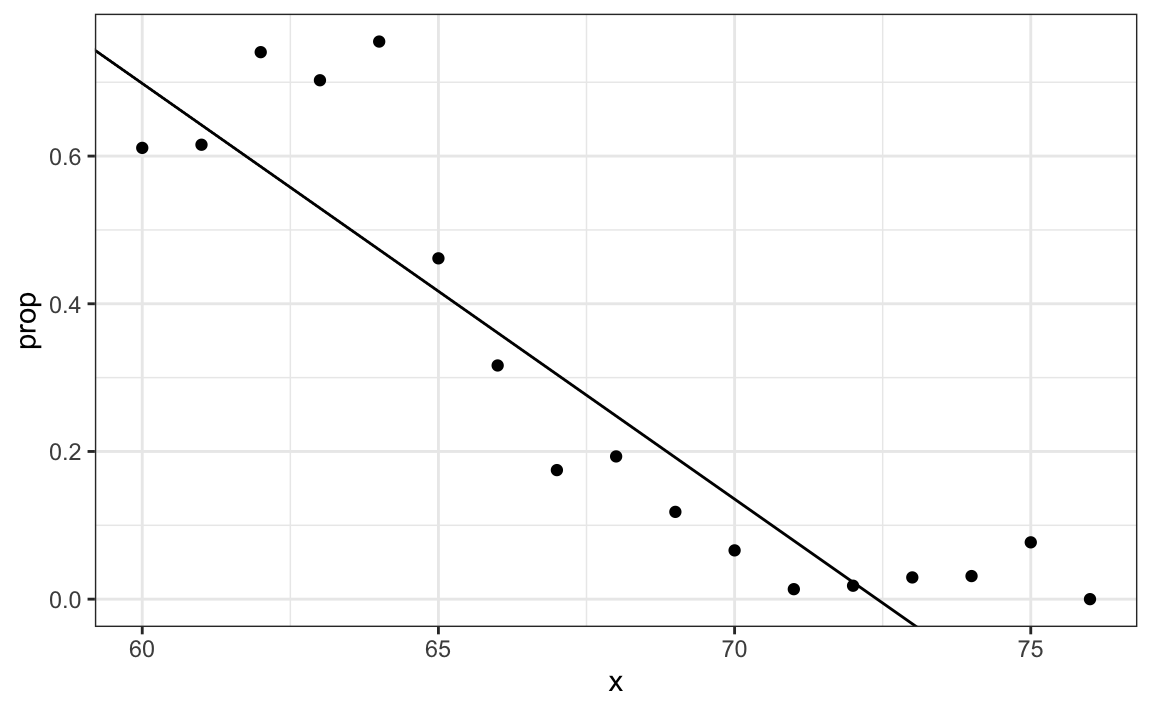

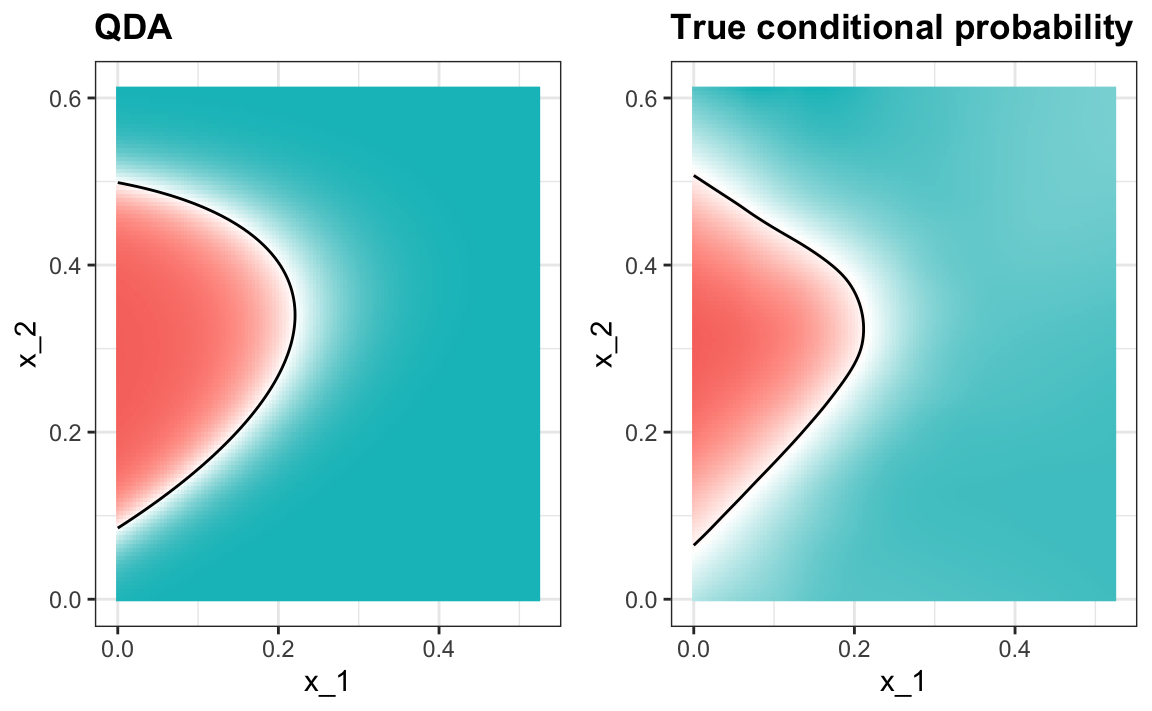

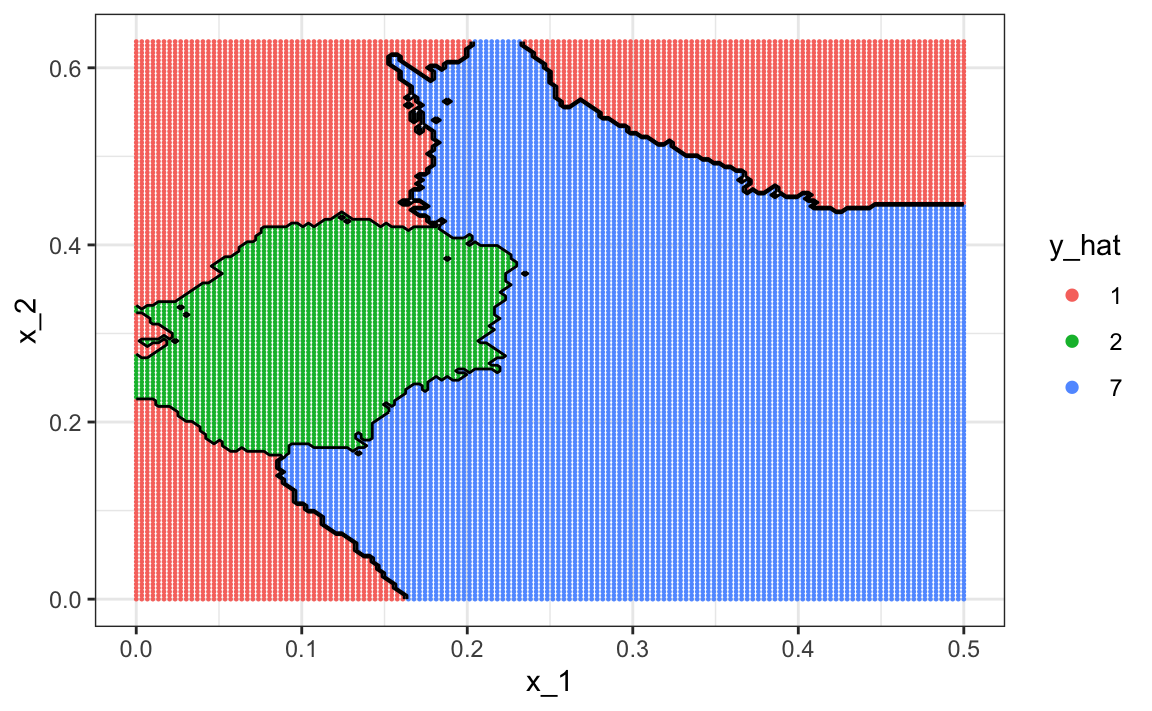

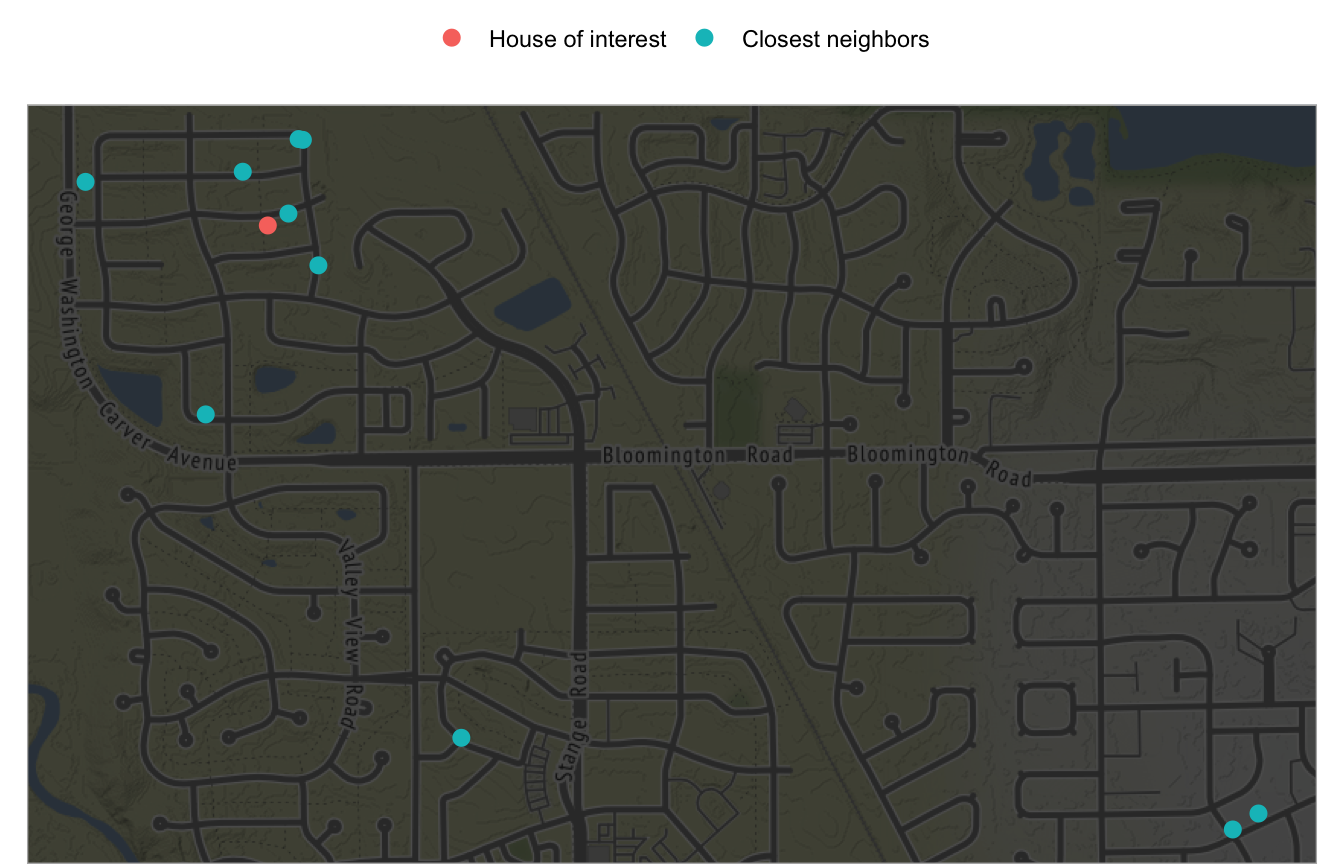

Chapter 8 K Nearest Neighbors Hands On Machine Learning With R

Pin On Environment Science Climate Change Impact

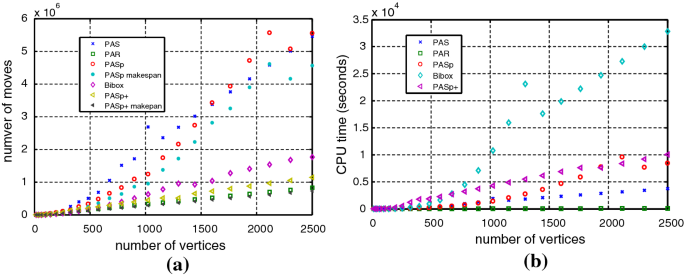

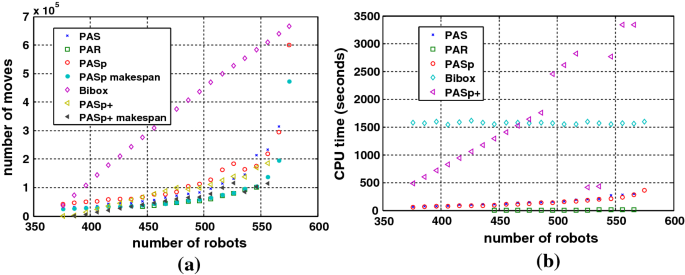

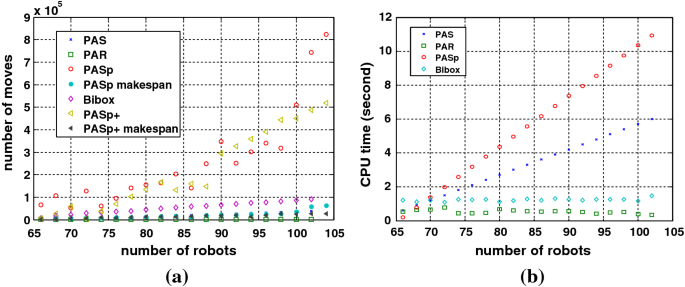

A Complete Multi Robot Path Planning Algorithm Springerlink

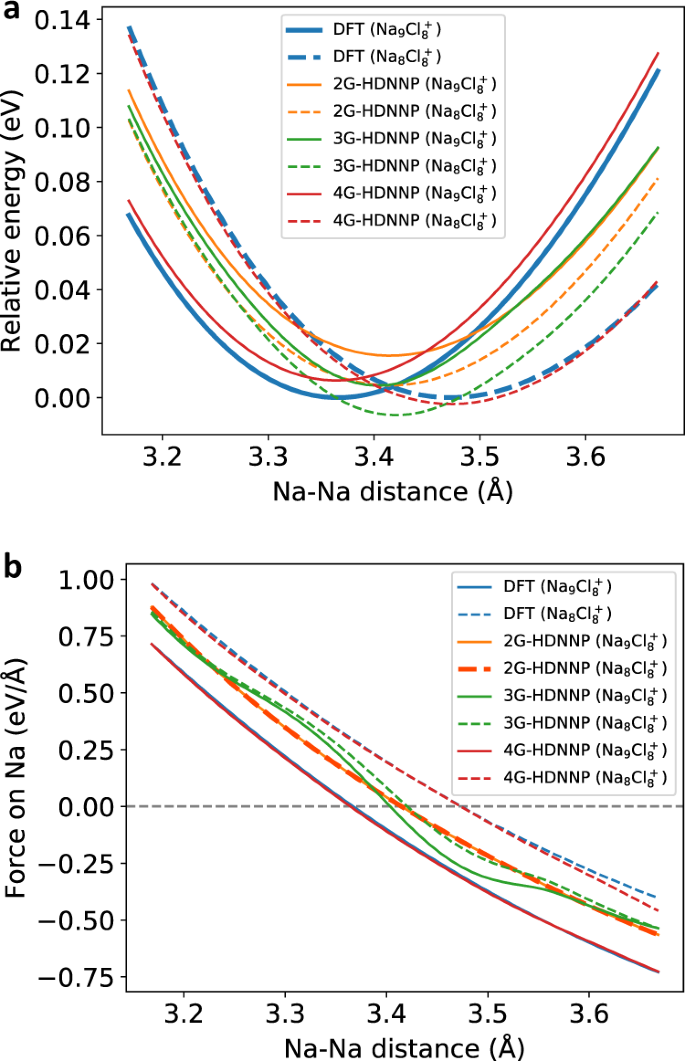

A Fourth Generation High Dimensional Neural Network Potential With Accurate Electrostatics Including Non Local Charge Transfer Nature Communications

200 Machine Learning Interview Questions And Answer For 2021

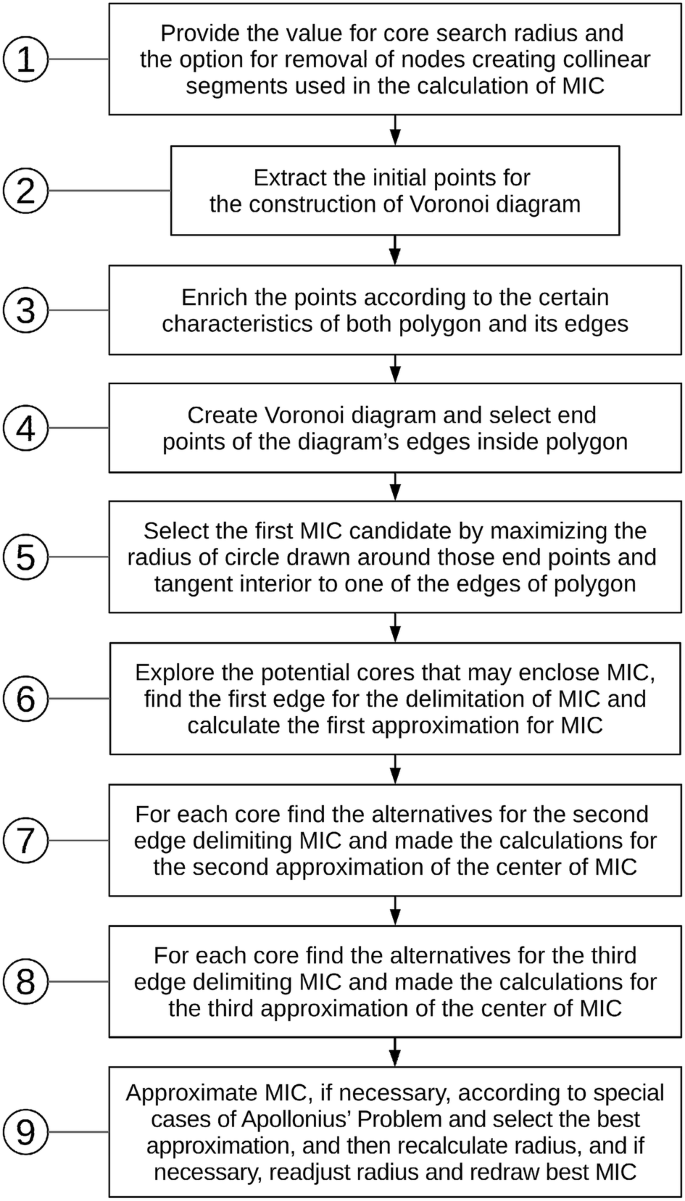

An Algorithm For Maximum Inscribed Circle Based On Voronoi Diagrams And Geometrical Properties Springerlink

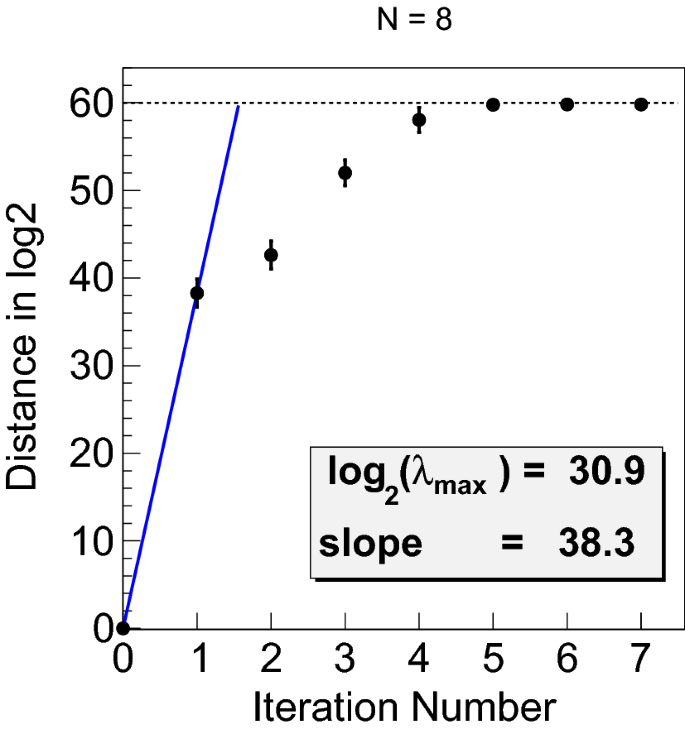

Review Of High Quality Random Number Generators Springerlink

China Mobile Browser Market Share Browser Share Market Web Browser

Scatter Plots A Complete Guide To Scatter Plots
